Quantum Investments Ltd. is a UK-based financial investment company. It resells financial products such as insurance and loans, and it carries out market research. The company's IT team manages the servers at its head office in Bristol. There are sixteen servers in total, fourteen of which host applications used by the company, such as the accounts software.

The organization's web services are hosted on the remaining two servers. The company has 110 PCs, and 40 staff members work on their PCs through a VPN to the Cisco ASA firewalls. The company wants to upgrade its IT infrastructure by virtualizing the thirteen application servers and running them in a cloud environment. It also wants to host the web services in the cloud, with auto-scaling.
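The auto-scaling requirement can be made concrete with a target-tracking rule. The sketch below is an assumption rather than part of the company's specification: it uses the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target), and clamps the result between hypothetical minimum and maximum instance counts.

```python
import math

def desired_instances(current: int, observed_cpu: float, target_cpu: float,
                      min_instances: int = 2, max_instances: int = 10) -> int:
    """Proportional target-tracking rule, as used by the Kubernetes HPA.

    current      -- web-service instances currently running
    observed_cpu -- average CPU utilization across those instances (%)
    target_cpu   -- utilization the operator wants to hold (%)
    min_instances/max_instances are hypothetical bounds for illustration.
    """
    if current <= 0 or target_cpu <= 0:
        raise ValueError("current and target_cpu must be positive")
    # Scale the fleet in proportion to how far the metric is from its target.
    raw = math.ceil(current * observed_cpu / target_cpu)
    # Clamp so the service never drops below, or grows beyond, the bounds.
    return max(min_instances, min(max_instances, raw))

print(desired_instances(2, 90, 60))   # → 3: load above target, scale out
print(desired_instances(8, 100, 50))  # → 10: capped at max_instances
```

The real HPA additionally ignores changes within a small tolerance band and applies stabilization windows before scaling down; those refinements are omitted to keep the rule visible.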

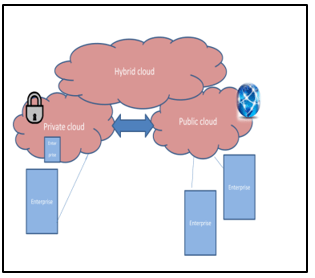

A hybrid cloud combines private and public clouds and is used to perform particular tasks within an organization. Public clouds cost less to implement and use than private clouds, and they scale better. In a hybrid cloud, the private portion hosts important applications, while the public portion hosts data and applications that are not critical. Different cloud providers can partner to combine public and private services, and hybrid cloud models can be implemented in several different ways [12].

Cloud providers can supply a complete hybrid package, and organizations that already have a private cloud can integrate public cloud services into their infrastructure. Hybrid cloud models are highly scalable and cost less than other cloud models. They also offer a higher level of security than public or private cloud services alone, and they are more flexible.

Cloud computing replaces an organization's physical computing resources with scalable, flexible resources delivered over the Internet through virtualization. Organizations otherwise spend considerable sums on hardware to increase storage capacity and meet their processing needs; cloud computing helps them save on these assets.

A hybrid cloud combines public and private clouds. In a private cloud, the infrastructure, networking and services are owned by the organization and provided internally [11]. Although private clouds have high initial costs, they offer very good security and are usually managed by the organization itself or by a third party. Only that single organization, together with any third party managing the cloud, has permission to access the cloud and use its resources. A private cloud also allows the storage, compute and networking components to be customized to suit a particular organization.

Figure 1: Hybrid Cloud architecture

Public clouds differ from private clouds: the services and networking infrastructure of a public cloud are owned by a service provider and accessed over the Internet. Public clouds are less secure than private clouds. Their resources are delivered to organizations as services over the public Internet. Both processing and data storage take place in the public cloud, and no on-premises integration is provided [9]. Unlike hybrid clouds, public clouds never let the users or the organization know where their data is stored.

Because resources are provided on demand, many companies prefer public clouds. Small and medium-sized companies do not need hybrid cloud infrastructure, since implementing it is too expensive for organizations of that size. They prefer to rent cloud resources, use the services that meet their needs, and pay only for the resources they consume. However, a company holds both sensitive and non-sensitive data, and a purely public or purely private cloud infrastructure is not enough to manage both. Hybrid cloud infrastructure is recommended as the solution for such companies.
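Managing mixed-sensitivity data in a hybrid cloud comes down to a placement policy. A minimal sketch, assuming a hypothetical three-level classification and hypothetical dataset names: confidential data stays in the private cloud, and everything else may use the public cloud.

```python
from dataclasses import dataclass
from enum import Enum

class Sensitivity(Enum):
    """Hypothetical three-level data classification."""
    PUBLIC = 1        # e.g. published marketing material
    INTERNAL = 2      # non-critical internal data
    CONFIDENTIAL = 3  # e.g. customer financial records

@dataclass
class Dataset:
    name: str
    sensitivity: Sensitivity

def placement(ds: Dataset) -> str:
    """Keep confidential data in the private cloud; let everything else
    use the cheaper, more scalable public cloud."""
    if ds.sensitivity is Sensitivity.CONFIDENTIAL:
        return "private"
    return "public"

for ds in [Dataset("loan_applications", Sensitivity.CONFIDENTIAL),
           Dataset("website_assets", Sensitivity.PUBLIC)]:
    print(ds.name, "->", placement(ds))
```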

Companies prefer hybrid clouds for their lower cost, among other reasons. In a hybrid cloud, some resources are managed within the organization or hosted in a private cloud, while the remaining resources are provided by public cloud services. A key advantage of a hybrid cloud is private, on-premises infrastructure that can be accessed directly [8]. Compared with public cloud services, hybrid clouds lower latency and reduce access time. The on-premises infrastructure can be used to support the organization's average workloads.
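If on-premises infrastructure carries the average workload, demand above that level has to go somewhere. A common pattern, often called cloud bursting, sends only the excess to the public cloud. The sketch below assumes that policy and uses hypothetical capacities measured in requests per interval.

```python
def split_workload(demand: int, on_prem_capacity: int) -> tuple[int, int]:
    """Return (on_premises, public_cloud) request counts for one interval.

    Hypothetical 'cloud bursting' policy: fill the on-premises capacity
    first and send only the excess to the public cloud.
    """
    if demand < 0 or on_prem_capacity < 0:
        raise ValueError("demand and capacity must be non-negative")
    on_prem = min(demand, on_prem_capacity)
    return on_prem, demand - on_prem

print(split_workload(800, 1000))   # → (800, 0): average load stays on-premises
print(split_workload(1500, 1000))  # → (1000, 500): the peak bursts to the public cloud
```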

Building a private portion into the hybrid cloud increases its flexibility, allowing the organization to use cloud resources at low cost. Hybrid clouds are highly scalable and secure, they perform well, and they are reliable for the companies that use them. They also cost much less than implementing a private cloud.

When planning a hybrid cloud implementation, identity management, data protection and compliance must be considered carefully. It is advisable to use distinct credentials, with the necessary permissions, to access the resources of a hybrid cloud [13]. One challenge is that public cloud platforms throttle inbound queries. Another aim is to use the same patterns and the same tools to process data regardless of where in the hybrid cloud the application runs. Securing and managing servers that sit in separate environments is often cumbersome. Constraints should be applied in a hybrid cloud to keep usage within planned levels.
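Throttling of inbound queries by a public cloud platform is commonly handled on the client side by retrying with exponential backoff and random jitter. This is a general technique rather than something the source prescribes; `ThrottledError` and the delay values below are hypothetical stand-ins for whatever a given provider's SDK raises and recommends.

```python
import random
import time

class ThrottledError(Exception):
    """Hypothetical error for a query rejected by rate limiting
    (for example, an HTTP 429 response from a public cloud API)."""

def call_with_backoff(query, max_attempts=5, base_delay=0.5, max_delay=8.0,
                      sleep=time.sleep):
    """Run `query` (a zero-argument callable), retrying with exponential
    backoff and full jitter while it raises ThrottledError."""
    for attempt in range(max_attempts):
        try:
            return query()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the throttling error
            # Upper bound doubles each attempt (0.5, 1, 2, 4 ...) up to max_delay;
            # a random wait below it spreads out retries from many clients.
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, cap))
```

The `sleep` parameter is injectable so the retry logic can be exercised without actually waiting.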

Difficulties can arise when the company depends on one hypervisor while the public cloud uses a different one [7]. Hybrid clouds also vary, because the public and private clouds built into them vary. Portability is another important issue: virtual machines and applications can be moved within a hybrid cloud, but moving their metadata and configurations between different environments can be complicated.
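As one illustration of the portability issue, a VM disk created for one hypervisor often has to be converted before another can use it. The sketch assumes QEMU's `qemu-img` tool does the conversion and that the source disk is a VMware VMDK; the file names are hypothetical. It converts only the disk image; the VM's configuration and metadata still have to be recreated in the target environment, which is the complication described above.

```python
import shlex
import subprocess

def build_convert_cmd(src: str, dst: str,
                      src_fmt: str = "vmdk", dst_fmt: str = "qcow2") -> list[str]:
    """Build a qemu-img command that converts a VM disk image between formats,
    e.g. a VMware VMDK disk into a qcow2 image for a QEMU/KVM host."""
    return ["qemu-img", "convert", "-f", src_fmt, "-O", dst_fmt, src, dst]

def convert_disk(src: str, dst: str, dry_run: bool = True) -> None:
    cmd = build_convert_cmd(src, dst)
    if dry_run:
        print(shlex.join(cmd))  # show the command without running it
    else:
        subprocess.run(cmd, check=True)  # needs qemu-img installed on PATH

convert_disk("app-server-01.vmdk", "app-server-01.qcow2")
```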

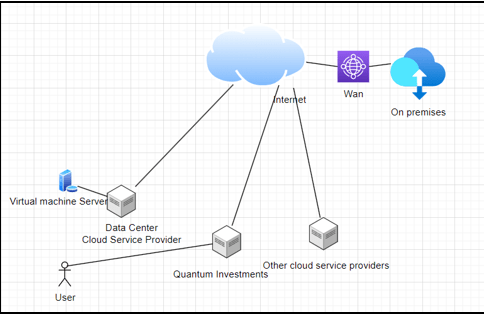

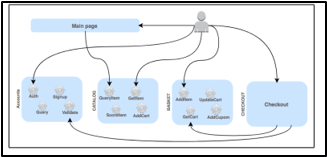

Figure 2: Design of the hybrid cloud model

The diagram above shows the hybrid cloud designed for Quantum Investments. It contains a data center and several cloud service providers. The cloud and the company are shown as separate components, together with the company's users. The diagram also shows a virtual machine server and the on-premises site, which is connected to the cloud over a WAN connection. This hybrid model suits Quantum Investments because it reduces the cost of implementing cloud infrastructure, and data and other resources remain well secured when the infrastructure is built on a hybrid cloud. These are the main reasons that justify a hybrid cloud design for the company.

FaaS stands for Function as a Service, and many studies have found that FaaS, used as a computational model, helps mitigate the challenges faced by various organizations. After the Internet emerged around 1990, telecom operators began to treat it as a new line of business. The Internet connects people in different parts of the world and provides a common platform for sharing opinions and ideas. Cloud computing has emerged as a "representative information business" [14]. FaaS has been used as an additional option alongside another model, PaaS. The popularity of the FaaS model has risen because of data volumes, the temporal characteristics of data, and platform variability.

Two scenarios were considered when serverless computing was conceived. In the first, applications rely on outside services to operate and their business logic is focused on the client side. This model was named "Backend as a Service", and its features include the use of outside services such as databases, messaging services and authentication services. In the second scenario, applications and their business rules reside in the cloud and run only on demand [15]. This model is called Function as a Service. The FaaS model splits a service into micro-functions that can be scaled and executed independently. Many studies have examined the FaaS model, and it is considered an alternative to the PaaS model.

Figure 3: A FaaS application

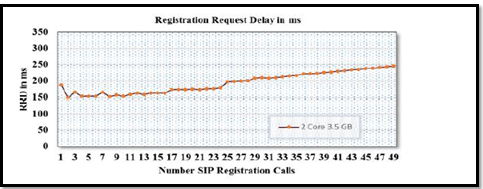

An application was developed using a microservices architecture with two services, one to receive geolocation data and one to provide it. The first service, service 1, stores and receives data through HTTP REST (Representational State Transfer) requests, while the second, service 2, receives requests and returns the results in JSON form [4]. In this experiment, AWS Elastic Beanstalk is the PaaS environment and AWS Lambda is the FaaS environment, and both are used to run an application developed in Node.js with a MongoDB database.
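The two services can be sketched as Lambda-style handlers. The experiment used Node.js and MongoDB; this is a Python approximation for illustration only. It assumes the `handler(event, context)` signature of Python Lambda functions and the proxy-integration response shape (`statusCode`, `body`), and it replaces MongoDB with an in-memory dictionary, which would not persist across real invocations. The field names `id`, `lat` and `lon` are hypothetical.

```python
import json

# In-memory stand-in for the MongoDB collection (illustration only).
_LOCATIONS: dict = {}

def service1_write(event, context=None):
    """Service 1: store a geolocation record sent in a REST request body."""
    record = json.loads(event["body"])
    _LOCATIONS[record["id"]] = {"lat": record["lat"], "lon": record["lon"]}
    return {"statusCode": 201, "body": json.dumps({"stored": record["id"]})}

def service2_read(event, context=None):
    """Service 2: return a stored geolocation record as JSON."""
    loc_id = event["pathParameters"]["id"]
    if loc_id not in _LOCATIONS:
        return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
    return {"statusCode": 200, "body": json.dumps(_LOCATIONS[loc_id])}
```

Because each handler is a separate function, each could be deployed and scaled independently, which is the property the FaaS model relies on.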

The operations performed were writes and reads: in both environments, write operations targeted service 1 and read operations targeted service 2. The findings show that cold starts in the FaaS environment affected the performance of the applications executed there. This behavior appears in applications with temporary lapses between requests, which cause resources to be deallocated [3]. Allocating more resources was also found to improve performance, and a relationship between the amount of memory and the resources allocated was established. The scalability of both the PaaS and FaaS models was satisfactory in all scenarios. PaaS scalability was found to be instance-based, occurring in resource jumps, whereas FaaS scalability was linear and based on the volume of events.
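The cold-start effect of idle lapses can be illustrated with a toy model: an instance is deallocated after a period without requests, and the next request pays an initialization penalty. The idle timeout and latencies below are hypothetical; providers do not publish a fixed idle timeout.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class FunctionInstance:
    """Toy FaaS instance that is deallocated after sitting idle."""
    idle_timeout_s: float = 600.0  # hypothetical idle time before deallocation
    cold_start_ms: float = 800.0   # hypothetical extra latency to initialise
    warm_ms: float = 50.0          # hypothetical latency when already warm
    last_call_s: Optional[float] = None

    def invoke(self, t: float) -> float:
        """Return the latency (ms) of a request arriving at time t (seconds)."""
        cold = (self.last_call_s is None
                or t - self.last_call_s > self.idle_timeout_s)
        self.last_call_s = t
        return self.warm_ms + (self.cold_start_ms if cold else 0.0)

fn = FunctionInstance()
print([fn.invoke(t) for t in [0, 10, 20, 1000, 1005]])
# → [850.0, 50.0, 50.0, 850.0, 50.0]
```

The request at t = 1000 s follows a lapse longer than the idle timeout, so it pays the cold-start cost again.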

Figure 4: FaaS environment

Some applications do not benefit from PaaS features: for them, the "permanent instantiation of resources" offers little benefit, because instances are kept alive and charged for regardless of whether they are used [5]. For applications whose workload varies, resources are allocated dynamically, and the FaaS model suits such situations. The costs of FaaS and PaaS environments vary across scenarios and depend on the dominant operation.
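The contrast between instance-based and event-based scaling can be expressed in two functions. This is a simplified sketch: the per-instance capacity is hypothetical, and the FaaS side uses Little's law (concurrency = arrival rate × average duration) to estimate concurrent executions.

```python
import math

def paas_instances(requests_per_s: float, capacity_per_instance: float) -> int:
    """PaaS: capacity grows in whole-instance jumps, and at least one
    instance stays running (and billed) even with no traffic."""
    return max(1, math.ceil(requests_per_s / capacity_per_instance))

def faas_concurrency(requests_per_s: float, avg_duration_s: float) -> float:
    """FaaS: concurrent executions follow event volume linearly
    (Little's law: concurrency = arrival rate x average duration)."""
    return requests_per_s * avg_duration_s

for load in [0, 50, 150, 250]:
    print(load, paas_instances(load, 100), faas_concurrency(load, 0.5))
```

With zero traffic, the PaaS side still holds one instance while FaaS concurrency drops to zero; as load rises, PaaS steps from one instance to two to three, whereas FaaS concurrency rises in a straight line.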

Costs in the FaaS environment were higher in the first two scenarios. The cost of the FaaS environment was found to be directly proportional to the execution time of the functions. Investing in database performance and caching can shorten read operations and thereby reduce cost. Some FaaS providers may also offer lower prices, particularly providers seeking to promote their products and services in the market.
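Why FaaS cost tracks function duration, and why caching reads lowers it, can be shown with a simple cost model. The structure mirrors pay-per-use FaaS pricing such as AWS Lambda's (a per-request charge plus compute billed in GB-seconds); all prices, volumes and instance counts below are hypothetical, not any provider's actual rates.

```python
def faas_cost(invocations: int, avg_duration_s: float, memory_gb: float,
              price_per_gb_s: float, price_per_million: float) -> float:
    """Pay-per-use FaaS cost: compute billed in GB-seconds plus a per-request
    charge, so cost scales with how long each function runs."""
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_s
    requests = invocations / 1_000_000 * price_per_million
    return compute + requests

def paas_cost(instances: int, hours: float, price_per_instance_hour: float) -> float:
    """Always-on PaaS cost: every running instance-hour is billed,
    whether or not it serves traffic."""
    return instances * hours * price_per_instance_hour

# Hypothetical month: 1M reads at 0.5 s each, then 0.25 s once a cache is added.
before = faas_cost(1_000_000, 0.50, 1.0, 0.00001, 0.20)
after = faas_cost(1_000_000, 0.25, 1.0, 0.00001, 0.20)
always_on = paas_cost(2, 720, 0.05)
print(f"FaaS {before:.2f} -> {after:.2f}, PaaS {always_on:.2f}")
```

Halving read duration halves the compute part of the FaaS bill, while the PaaS figure is unchanged by traffic; which model is cheaper therefore depends on the workload, as the text notes.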

Kubernetes is a way of supporting container-based deployment in a Platform as a Service cloud. It is an alternative to FaaS and PaaS environments and concentrates on cluster-based systems [6]. Kubernetes performs very well and has been used increasingly in recent years. Much research is being conducted in cloud computing, and the field is expected to keep growing.

Many organizations have shifted to hybrid cloud computing because of the security it offers for the large volumes of data stored in these clouds. Many businesses that treat security as a priority have started adopting hybrid cloud, which provides many services. Businesses can make changes in the cloud without disturbing the existing system. Although hybrid cloud computing has shortcomings, it offers numerous business benefits, and organizations also value the speed it makes possible. Research on hybrid cloud has recommended VMware vCloud Hybrid Service as a way to improve the flexibility of cloud services.

The rise of cloud computing has spurred research in related areas, some of which use computational clouds to support various applications. Varying request volumes, heterogeneous platforms and availability requirements are features of cloud computing environments that deliver the gains in scale, availability and independence needed to support recent applications built on microservice architectures [16]. Although PaaS is a well-established model, FaaS has emerged as an alternative for supporting microservice applications. FaaS uses resources efficiently and has comparatively low implementation costs. However, the FaaS cold start should be considered before adopting the model, since it can affect application performance. FaaS scalability suits services with unpredictable workloads, whereas PaaS suits predictable workloads.

Future studies are expected to examine the cost of implementing FaaS environments from the service provider's perspective, and further research will aim to reduce FaaS cold starts.

Thus, the cloud computing service recommended for Quantum Investments is hybrid cloud computing, and the cloud design has been provided in the form of a diagram.

Conclusion

The company's web service is a Node.js web service connected to a MySQL database server. The company also wants to back up its servers using a cloud service so that, if a problem occurs at its office, the virtual machines can be recovered.

The cloud is generally seen as the next stage in the evolution of Internet technology. It provides the means by which everything from computing power to business processes and collaboration can be delivered to customers as a service, whenever and wherever it is needed.

Cloud storage provides storage services such as block and file services, while a data cloud provides data-management services such as record-oriented, column-oriented and object-oriented services. Cloud computing also provides a convenient platform that reduces the cost of the equipment needed to process large amounts of data.

Different types of cloud provide different kinds of resources, such as processing, data storage, management services and application services. A cloud is typically built from large numbers of inexpensive machines, so cloud vendors can add capacity and quickly replace machines when they fail. Well-known cloud computing platforms include Apache Hadoop, Microsoft Azure and Google Apps.

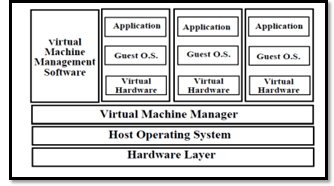

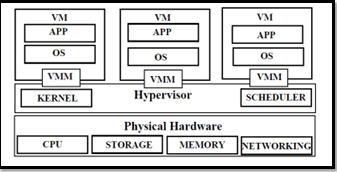

Virtualization is a technology that introduces a software abstraction layer between the hardware and the operating systems and applications running on top of it [17]. This abstraction layer is called the virtual machine monitor (VMM) or hypervisor, and its basic function is to hide the physical resources of the computing system from the operating systems. Because the hardware resources are controlled by the VMM rather than by the OS, several operating systems can run in parallel on the same hardware. The hardware platform is partitioned into one or more logical units known as virtual machines (VMs). Resource services arbitrate the allocation of resources within the system, and users can manipulate the properties of their virtual machines and networks, as well as the monitors of system components and virtual resources.

There are two essential kinds of hypervisor. The first is the bare-metal hypervisor, also called a type 1 hypervisor, which is installed directly on the physical hardware of the host machine. The second is the hosted hypervisor, also called a type 2 hypervisor, which is installed as a program on top of the host operating system.

With a bare-metal hypervisor, no operating system sits between the host machine's hardware and the virtualization software layer. In effect, the bare-metal hypervisor takes the place of the host operating system, allowing multiple virtual machines to run on top of it simultaneously.

Because the bare-metal hypervisor runs directly on the host machine's hardware, it has direct access to the available computing resources. Each VM created and operated through the bare-metal hypervisor logically takes its share of the host machine's resources, including processing power, memory and storage. No resources are lost to hosting an operating system, so VMs can use a higher percentage of the server's physical resources.
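The resource advantage of a bare-metal hypervisor can be shown with simple arithmetic on host memory. All figures are hypothetical: the sketch assumes a 64 GB host, 2 GB reserved by the hypervisor, and, for a hosted (type 2) hypervisor, a further 4 GB taken by the host OS.

```python
def vm_share(host_ram_gb: float, hypervisor_gb: float, host_os_gb: float = 0.0) -> float:
    """Fraction of host RAM left for virtual machines.

    Type 1 (bare-metal): there is no host OS, so host_os_gb stays 0.
    Type 2 (hosted): the host OS footprint is also unavailable to VMs.
    """
    usable = host_ram_gb - hypervisor_gb - host_os_gb
    if usable < 0:
        raise ValueError("overheads exceed host memory")
    return usable / host_ram_gb

print(vm_share(64, 2))                # type 1 → 0.96875
print(vm_share(64, 2, host_os_gb=4))  # type 2 → 0.90625
```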

A bare-metal hypervisor provides many benefits, some of which are listed below.

It allows organizations to build and run virtual machines quickly.

It provides a cost- and energy-efficient way to run multiple VMs, instead of hosting separate operating systems on multiple servers.

It ensures that operating systems and their applications can run on different types of hardware, even when they rely on specific devices or drivers.

It interacts directly with the host computer's resources, such as the CPU, RAM and physical storage.

It removes the need to pass through a separate OS layer, which often reduces latency.

It improves VM portability and migration: each VM can be moved to a different host computer depending on its networking, memory, storage and processing requirements (Wang et al. 2020).
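Choosing where to migrate a VM based on its networking, memory, storage and processing requirements can be sketched as a filter-then-select step. The host names and capacities are hypothetical, and best fit on memory is just one possible selection heuristic, chosen here to pack hosts tightly.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Host:
    name: str
    free_vcpus: int
    free_ram_gb: int
    free_disk_gb: int
    free_net_mbps: int

@dataclass
class VMNeeds:
    vcpus: int
    ram_gb: int
    disk_gb: int
    net_mbps: int

def fits(host: Host, vm: VMNeeds) -> bool:
    """True if the host has spare capacity in every dimension the VM needs."""
    return (host.free_vcpus >= vm.vcpus and host.free_ram_gb >= vm.ram_gb
            and host.free_disk_gb >= vm.disk_gb and host.free_net_mbps >= vm.net_mbps)

def pick_target(vm: VMNeeds, hosts: List[Host]) -> Optional[Host]:
    """Return a migration target, or None if no host can take the VM."""
    candidates = [h for h in hosts if fits(h, vm)]
    if not candidates:
        return None
    # Best fit on memory: choose the host left with the least unused RAM.
    return min(candidates, key=lambda h: h.free_ram_gb - vm.ram_gb)

hosts = [Host("host-a", 4, 8, 100, 1000),
         Host("host-b", 16, 64, 500, 1000),
         Host("host-c", 8, 16, 200, 1000)]
target = pick_target(VMNeeds(vcpus=4, ram_gb=12, disk_gb=50, net_mbps=100), hosts)
print(target.name if target else "no suitable host")  # → host-c
```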

Many vendors offer bare-metal hypervisors, and each offers different configurations to suit an organization's requirements. Key factors to consider include how many virtual machines need to be deployed, the maximum percentage of resources to be allocated to each VM, and any other requirements.
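The sizing factors above (number of VMs and the resources allocated to each) translate into a rough host count. This sketch assumes identical hosts, no CPU overcommit, and a hypothetical 80% utilization ceiling; the per-VM and per-host figures are illustrative, with the thirteen application servers from the company scenario used as the VM count.

```python
import math

def hosts_needed(vm_count: int, vcpus_per_vm: int, ram_gb_per_vm: float,
                 host_vcpus: int, host_ram_gb: float,
                 max_utilization: float = 0.8) -> int:
    """Estimate identical hosts needed for a VM fleet, keeping each host
    below max_utilization of its CPU and RAM (no overcommit assumed)."""
    per_host_by_cpu = math.floor(host_vcpus * max_utilization / vcpus_per_vm)
    per_host_by_ram = math.floor(host_ram_gb * max_utilization / ram_gb_per_vm)
    per_host = min(per_host_by_cpu, per_host_by_ram)  # the tighter limit wins
    if per_host == 0:
        raise ValueError("a single VM does not fit within one host's limit")
    return math.ceil(vm_count / per_host)

# 13 application-server VMs, each 4 vCPUs / 16 GB, on 32-vCPU / 128 GB hosts.
print(hosts_needed(13, 4, 16, 32, 128))  # → 3
```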

When selecting a bare-metal hypervisor, it is therefore important to research each vendor, since many vendors offer multiple products, different tools and various licensing tiers.

In the digital and computer world, virtual environments are often perceived by application programs and users much like real environments, although the underlying mechanisms can differ significantly. Virtualization technology offers key benefits such as resource sharing and isolation. It is classified into several types, including full virtualization, paravirtualization, operating-system-level virtualization and hardware virtualization. These concepts form the foundation of modern computing systems and cloud technologies.

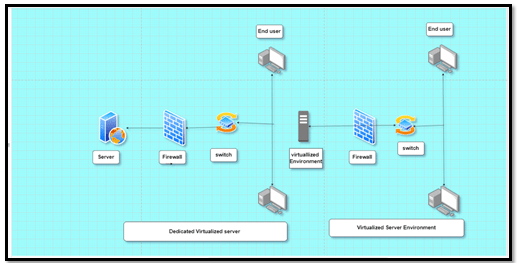

Figure 5: Drawing of a virtualized server environment and a dedicated virtualized server

Creating a VM typically involves reserving resources in the system resource state (SRS) and issuing downstream requests to create the VM; if these succeed, the resources are then committed in the SRS. The middle tier can therefore control handling services such as creation, data modification and data interrogation, as well as the tiers for system and user data (Lindvärn and Lundqvist, 2021). Users can query the services to discover the kinds of resources available and related information, such as disk images and clusters.
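The reserve-then-commit sequence for VM creation can be sketched as follows. This is a minimal illustration under stated assumptions: the hypothetical `ResourceState` class tracks only vCPUs, and `launch` is a hypothetical callable standing in for the downstream VM-creation request. A failed launch releases its reservation so capacity is not leaked.

```python
class ResourceState:
    """Hypothetical system resource state (SRS) tracking free vCPUs."""

    def __init__(self, vcpus: int):
        self.free = vcpus
        self.reserved = {}   # request id -> reserved vCPUs
        self.committed = {}  # request id -> vCPUs in use by a running VM

    def reserve(self, req_id: str, vcpus: int) -> bool:
        if vcpus > self.free:
            return False
        self.free -= vcpus
        self.reserved[req_id] = vcpus
        return True

    def commit(self, req_id: str) -> None:
        self.committed[req_id] = self.reserved.pop(req_id)

    def release(self, req_id: str) -> None:
        self.free += self.reserved.pop(req_id)

def create_vm(srs: ResourceState, req_id: str, vcpus: int, launch) -> bool:
    """Reserve in the SRS, issue the downstream create, then commit on
    success or release the reservation on failure."""
    if not srs.reserve(req_id, vcpus):
        return False  # not enough capacity: request refused
    try:
        launch(req_id)  # downstream request, e.g. to a node controller
    except Exception:
        srs.release(req_id)  # any failure gives the capacity back
        return False
    srs.commit(req_id)
    return True
```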

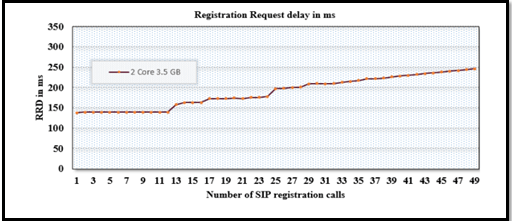

Figure 6: Performance of a dedicated server

Figure 7: Graph of virtual web server performance

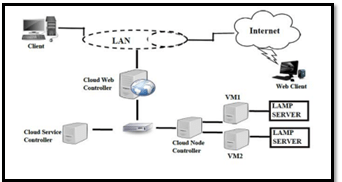

The resource services process users' virtual machine requests and interact with the "cloud web controller" to allocate and de-allocate physical resources. A simple representation of this "system resource state" is maintained through communication with the various cloud service controllers.

Figure 8: Diagram representing the entire virtualization setup

The major advantages of virtualization technologies include flexibility, availability, scalability, better hardware utilization, security, reduced costs, adaptability to workload variation, load balancing, and support for legacy applications.

The primary role of the SRS is to track the stages a user request passes through after it arrives. Admission control depends on the information held in the SRS and involves decisions based on user-specified requirements and service-level expectations (Kouvatsos, 2019). Abstract parameters, such as key pairs, security groups and network definitions, are then applied to the virtual machine and network allocations.

The resource services interact with the controller services, which resolve references to user-supplied parameters such as the keys associated with a VM. However, these services are not static configuration parameters. The services that manage networking, security groups and data persistence must therefore act as agents that translate user requests into changes to the running collection of virtual machines and that support the cloud networks (Banaie et al. 2020).

CPU utilization is a key performance metric. It can be used to track CPU performance regressions and improvements, and it provides useful data points when investigating performance problems. The concept is easiest to illustrate with a single-core processor fixed at a frequency of about 2.0 GHz: CPU utilization is then the percentage of the processor's capacity required to do a given piece of work.

After tests have been performed on the cloud platform, it is also of interest to determine what such a private cloud would cost particular companies (Park et al. 2020). This requires identifying the hardware to be virtualized across the machines and its price. Finally, it is essential to evaluate the savings in energy and resources, because cloud computing allows virtualization to be used to reduce energy consumption.
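The CPU utilization definition for a single core at a fixed 2.0 GHz can be written as a short calculation. This simplified sketch assumes the number of busy cycles is known; operating systems usually measure utilization as busy time over elapsed time instead, which is equivalent when the frequency is fixed.

```python
def cpu_utilization(busy_cycles: float, interval_s: float,
                    freq_hz: float = 2.0e9) -> float:
    """Percent of a single core's capacity used over an interval.

    At a fixed clock, the core can execute freq_hz * interval_s cycles,
    so utilization = busy cycles / available cycles * 100.
    """
    capacity = freq_hz * interval_s
    return 100.0 * busy_cycles / capacity

print(cpu_utilization(5e8, 1.0))  # 0.5 billion busy cycles in 1 s → 25.0
```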

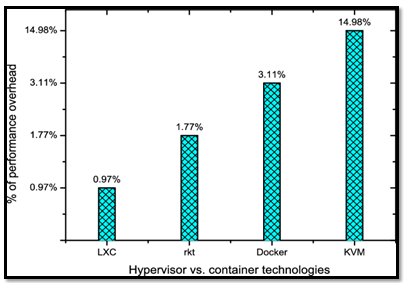

The hypervisor is the most important component of a virtualization environment. It is the program that allows multiple guest operating systems to run in isolated virtual machines while sharing a single physical machine, the host.

Figure 9: Virtualization in cloud computing (Hypervisor and Container technologies)

To manage parallel operating systems on a machine, a virtualization layer is added between the hardware and the operating systems. This layer allows multiple operating systems to run concurrently within virtual machines on a single computer, dynamically partitioning and sharing physical resources such as the CPU, storage, memory and I/O devices. VMs act as resources in service-oriented architectures and can be accessed through web service interfaces.

Figure 10: The representation of the virtual environments

From the above, it can be observed that public clouds provide resources very dynamically, whereas private clouds mostly provide resources on demand. Private clouds are also more reliable. In this study, a comparison of CPU performance between a public cloud and a private cloud is presented, the private cloud being developed on a cloud platform such as My Cloud (Banaie et al. 2020).

The system design is divided into five segments: network design, the My Cloud 11.0 application, software components, web applications, and workload generation.
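The workload-generation segment is not specified in detail. One common approach, assumed here purely for illustration, is to model request arrivals as a Poisson process by drawing exponentially distributed gaps between requests; the rate and duration are hypothetical.

```python
import random

def poisson_arrivals(rate_per_s: float, duration_s: float, seed: int = 42) -> list:
    """Generate request arrival times (seconds) for a load test.

    Assumes a Poisson arrival process: gaps between requests are
    exponentially distributed with mean 1 / rate_per_s.
    """
    rng = random.Random(seed)  # seeded so test runs are reproducible
    t, arrivals = 0.0, []
    while True:
        t += rng.expovariate(rate_per_s)
        if t >= duration_s:
            return arrivals
        arrivals.append(t)

schedule = poisson_arrivals(rate_per_s=50, duration_s=10)
print(len(schedule), "requests in 10 s")  # about 500 on average
```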

Figure 11: Testbed for experiment design

A private network was built from several workstations to establish a heterogeneous cloud on a LAN. The proposed framework establishes the cloud using a cloud web controller, cloud service controllers and cloud node controllers.

QEMU is a fast, open-source machine emulator that can emulate multiple hardware architectures. It lets an unmodified operating system run as a guest VM on top of the host system, the VM host server. QEMU comprises several parts, including the processor emulator, emulated devices such as the graphics card, network card and hard disk, and a debugger.
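As an illustration of running an unmodified guest OS under QEMU, the sketch below assembles a `qemu-system-x86_64` command line. The disk file name, memory size and vCPU count are hypothetical; `-m`, `-smp`, `-drive` and `-enable-kvm` are standard QEMU options, and KVM acceleration is only available on a Linux host with KVM support.

```python
import shlex

def qemu_cmd(disk: str, memory_mb: int = 2048, cpus: int = 2,
             use_kvm: bool = True) -> list:
    """Build a command that boots an unmodified guest from a qcow2 disk."""
    cmd = ["qemu-system-x86_64",
           "-m", str(memory_mb),   # guest RAM in MiB
           "-smp", str(cpus),      # number of virtual CPUs
           "-drive", f"file={disk},format=qcow2"]
    if use_kvm:
        cmd.append("-enable-kvm")  # hardware acceleration on a KVM host
    return cmd

print(shlex.join(qemu_cmd("guest.qcow2")))
```

Without `-enable-kvm`, QEMU falls back to pure software emulation, which still runs the guest unmodified but more slowly.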

Containers are lightweight and often more agile for virtualization. Because they do not use a hypervisor, users benefit from faster resource provisioning and faster availability of new applications. Containerization packages a single application or microservice together with the runtime libraries it needs to run. A container includes the code and all of its dependencies, which enables the application to run anywhere: on a desktop, on a traditional computer, or on cloud-based IT infrastructure. Rather than virtualizing the underlying hardware, containers virtualize the operating system (such as Linux or Windows), so each container holds only the application and its libraries or dependencies. Containers are therefore small, fast and highly portable: unlike virtual machines, they do not need to include a guest OS in every instance and can instead leverage the features and resources of the host OS. Like virtual machines, containers allow developers to improve the CPU and memory utilization of physical machines (Zhang et al. 2019).
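The density advantage of containers can be shown with simple arithmetic. All memory figures are hypothetical, and the model ignores CPU limits and per-container runtime overhead; it captures only the difference that each VM carries its own guest OS while containers share the host OS.

```python
def instances_per_host(host_ram_gb: float, app_ram_gb: float,
                       per_instance_os_gb: float, shared_os_gb: float) -> int:
    """How many app instances fit in host RAM.

    per_instance_os_gb -- OS memory each instance carries (guest OS for a VM)
    shared_os_gb       -- memory reserved once per host (hypervisor or host OS)
    """
    usable = host_ram_gb - shared_os_gb
    return int(usable // (app_ram_gb + per_instance_os_gb))

# VMs: each instance carries a guest OS; the host reserves 2 GB.
vms = instances_per_host(64, 1, per_instance_os_gb=2, shared_os_gb=2)
# Containers: instances share the host OS, so no per-instance OS memory.
containers = instances_per_host(64, 1, per_instance_os_gb=0, shared_os_gb=2)
print(vms, containers)  # → 20 62
```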

Containers also enable microservice architectures, in which application components can be deployed and scaled more easily and at a finer granularity. Containerized applications give teams flexibility in handling the software environments of modern IT systems. Containers are well suited to automation and to development pipelines that implement continuous integration and continuous deployment (CI/CD). Large enterprise applications may include a huge number of containers, and managing them can present serious issues for teams. Containers also provide a high level of flexibility and portability, which makes them ideal for a multi-cloud world.

Conclusion

The cloud refers to infrastructure that provides resources and services over the Internet, and it offers several significant computational services. Cloud infrastructures are designed to store large volumes of scattered and distributed data and to execute data mining over it, connected through high-performance wide area networks.
